A common bottleneck on busy OLTP applications is contention on the buffer cache. If too many sessions attempt to read the same blocks from the buffer cache, then they can queue up waiting for low level latches to be released before they can read the blocks from the cache.

There are two main block contention effects that can be experienced by an Oracle database and show up as database wait events:

Buffer Busy Waits

These are common in an I/O-bound Oracle system, as evidenced by any system with read (sequential/scattered) waits in the top-five waits. The two main cases where this wait can occur are:

- Another session is reading the block into the buffer

- Another session holds the buffer in an incompatible mode

Cache buffers chains latch waits (CBC)

This alternatively referred to as “hot block” contention. The cache buffers chains latches are used to protect a buffer list in the buffer cache. These latches are used when searching for, adding, or removing a buffer from the buffer cache. Blocks in the buffer cache are placed on linked lists (cache buffer chains) which hang off a hash table. The hash chain that a block is placed on is based on the DBA and CLASS of the block. Each hash chain is protected by a single child latch. Processes need to get the relevant latch to allow them to scan a hash chain for a buffer so that the linked list does not change underneath them. The two main cases where this wait can occur are:

- Very long buffer chains. Use multiple buffer pools to spread things out or use DB_BLOCK_LRU_LATCHES to increase the number of latches.

- Very heavy access to the same blocks. Typically it indicates a query that might need to be tuned (to access less blocks hence avoiding the collisions)

SQL with Excessive I/O

Block contention can be remedied by tuning bad SQL suffering excessive and un-necessary block reads, i.e. reduce the I/O. The things to look out for are:

- Check for SQL statements using unselective indexes (long index range scans), particularly with indexes with a poor clustering factor. (Clustering factor is a metric to indicate how contiguous the blocks on the underlying table when the indexed is scanned sequentially).

- Check for SQL using un-necessary large full table scans. Unnecessary large-table full-table scans consume a high amount of disk I/O that can drag-down the performance of the whole database. Small-table full table scans are acceptable, so long as these tables are cached in the KEEP pool. To evaluate whether a full table scan is large requires looking at the number of rows returned by a large-table full-table scan to determine if an index range scan will make the query run faster and with less disk I/O. In general, though it is impossible to define any specific guideline, a SQL query that accesses more than 40% of the rows in a table will run faster with a full-table scan, depending on the degree of parallelism for the table and the setting for db_file_multiblock_read_count. For those queries that return less than 40% of table rows, you should consider adding indexes and hints to remove the full-table scan and force an index range scan.

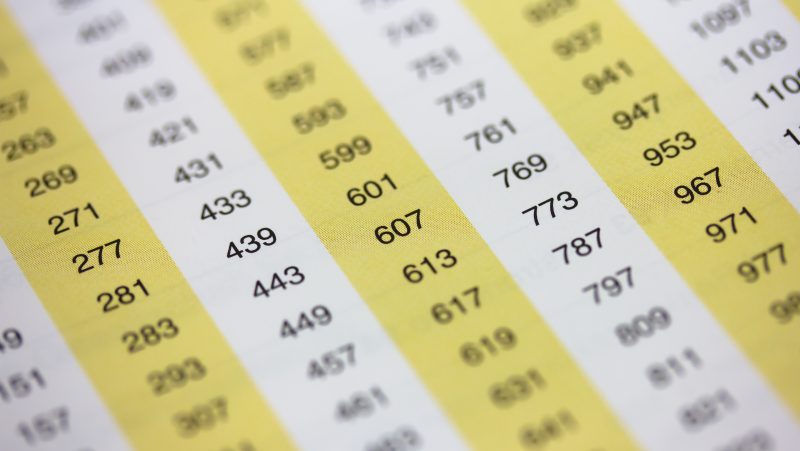

- Check for skewed indexes i.e. ones that insert serial numbers generated from a sequence. On an OLTP system this can force all the activity at the latest entries which will all be in the same blocks. A solution is to use reverse-key indexes as long as the index is not required for range scans.

- Check for small tables that are frequently accessed. E.g. a table has 10 rows, all 10 rows fit into a single block, and the block then gets hammered. A possible solution is to “alter table minimize records_per_block” (which is really intended for tables with many BITMAP indexes) and have just 1 or 2 records/block, or set PCTFREE really high to force one record per block.

- Check for tables that have a high level of concurrent inserts either from other sessions or through parallel processing. These will cause buffer busy waits on data blocks and can be resolved by increasing the number of FREELISTS for the table.

- Consider using Automatic Segment-Space Management (ASSM). It is a simpler and more efficient way of managing space within a segment and completely eliminates any need to specify and tune the PCTUSED, FREELISTS, and FREELIST GROUPS storage parameters.

- Consider increasing the INITRANS table/index storage parameter on segments suffering high write concurrency. The INITRANS setting controls Initial Transaction Slots (ITLs), where a transaction slot is required for any session that needs to modify a block on an object. The more concurrency there is on a block, then the higher this setting needs to be (up to a maximum of 255, the default for tables being 1, where the value is the expected concurrency on a block).

- Shrink segments that have a lot of empty blocks and subject to large full table scans (ultimately there should not be any large full table scans in an OLTP database). A table scan will always scan up to the High Water Mark (HWM) even if space is not used. Common offenders are process that repeatedly insert and delete data on a table, particularly via an INSERT /*+APPEND */ without a TRUNCATE … DISCARD STORAGE. Tablespaces with ASSM enabled will manage this automatically. NOTE: Segment shrink is not supported for tables with function-based indexes, domain indexes, or bitmap join indexes and the table must have ROW MOVEMENT enabled.

Conclusion

Many of the points discussed in this article can be pre-empted during database design and therefore avoid the database performance crises that can often hit a development project.